On Analytic Candy

Sport analytics has exploded in popularity over the past two decades. And in the past decade, as we’ve entered the “big data” era of sport analytics, we’ve seen a proliferation of garbage metrics: analytic candy.

Analytic candy is to analytics what candy is to your diet. It’s seductive. It’s fun. It offers you nothing useful, and if you consume too much of it, you’ll be worse off.

Analytic candy sounds like this:

Tyreek Hill has the fastest recorded play in the NFL this year at 22.01 MPH

Jalen Hurts has a 70% winning percentage

LeBron James scored 24.5 points per game in the playoffs last year

Shohei Ohtani led the league in slugging percentage and was tied for 3rd in shutouts

These stats are compelling. Tyreek Hill is fast. Jalen Hurts has won a lot. LeBron James can still score. Shohei Ohtani hits and pitches well.

But none of this helps us understand the games being played.

How much does Tyreek’s speed help his team? How much is Jalen’s winning actually attributable to his own play? Is LeBron’s scoring an efficient use of possessions? How valuable does Shohei’s two-way expertise make him?

The stats above don’t answer any of those questions.

Spotting Analytic Candy

So, we know it’s bad for our diet, but how do we avoid it? It’s not like analytic candy is labeled with bright colors and cartoon characters. Instead, look for a few telltale signs:

Stat spewing

Not opinionated about the sport

“Advanced” metrics or data

“Conditional” data

Stat spewing is when a commentator rattles off a bunch of stats in quick succession in an attempt to make a point. For example, from a recent article by The Athletic’s Chris Kirschner (count how many stats are spewed to make a single point):

But back then, Stanton was one of the faster players in the league, ranking in the 70th percentile in sprint speed. This year, Stanton ranked in the fourth percentile, which means hitting infield groundballs will usually result in an out. Stanton finished this season with an awful .202 xwOBA (formulated using exit velocity, launch angle and, on certain types of batted balls, sprint speed) on all groundballs he hit. Stanton’s batting average on balls in play this season was .210 (the major league average was .307).

In this passage, Chris lists a bunch of numbers saying that Giancarlo Stanton has gotten slower, so ground balls are a worse outcome for him. All of this about a 33-year-old who hasn’t recorded a stolen base in four years. His speed was not under investigation. No advanced stats needed.

Stat spewing is pretty easy to identify. Whenever someone switches between stats quickly, or lists off a lot of separate stats, ask yourself whether they really have a point or are just trying to overwhelm you with numbers. Not all evidence is relevant evidence.

Which brings us to the critical one: analytics that are not opinionated about the sport they assess.

Consider two basketball analytics: usage rate and points per game. One of these we like a lot (usage rate), and one of these we don’t much care for (points per game).

Usage rate is opinionated. It was created because basketball analysts (John Hollinger, Dean Oliver) had an opinion about the game: how much of an offense a player accounts for matters. And to the extent we believe in that stance, usage rate is a good guide to whether the Celtics will be the most balanced team in the league, or to making sense of Jordan Poole’s (expected) big numbers this season.
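To show how directly that opinion is baked into the math, here is a minimal Python sketch of usage rate. It assumes the commonly cited Basketball-Reference form of the formula; other versions (including Hollinger’s original) weight the terms differently. The 0.44 weight on free-throw attempts is the standard approximation for how many free throws end a possession. The function and parameter names are our own, for illustration.

```python
def usage_rate(fga, fta, tov, minutes, team_fga, team_fta, team_tov, team_minutes):
    """Estimated share (%) of team possessions a player 'uses' while on the floor.

    Assumes the Basketball-Reference form of USG%; names are illustrative.
    """
    # Possessions the player ended: shots, trips to the line (0.44 weight), turnovers.
    player_poss = fga + 0.44 * fta + tov
    team_poss = team_fga + 0.44 * team_fta + team_tov
    # team_minutes / 5 converts total team minutes into game minutes (five players on court).
    return 100 * player_poss * (team_minutes / 5) / (minutes * team_poss)


# A player on the floor all 48 minutes who ends 20 of his team's 100 possessions:
print(usage_rate(fga=16, fta=0, tov=4, minutes=48,
                 team_fga=80, team_fta=0, team_tov=20, team_minutes=240))
```

The opinion lives in the numerator: every shot, free-throw trip, and turnover counts as the player “using” a possession, whether or not it ended in points.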

Points per game, on the other hand, we count because it’s easy. It would be hard not to track this data in the course of faithfully capturing what’s going on in the game. And we do need scoring data to make heads or tails of basketball. But points per game (and, even more useless, total points scored) is a near-meaningless stat. Embiid led the league in points per game last year. But in the playoffs, the 76ers’ offense looked lifeless when James Harden wasn’t driving the bus.

We dismiss points per game as a stat because we dismiss the underlying “all you need to do is score lots of points” idea. Every stat can be looked at this way: name the theory of the game it encodes, then ask whether you believe that theory.

For instance, consider the now-numerous stats floating around about Travis Kelce when Taylor Swift is watching versus when she’s not. The underlying theory is that high-profile fans at a game alter player performance. We can all easily dismiss this.

We need to be faster to dismiss other unopinionated stats.

Next, we come to Advanced Metrics. Advanced Metrics, and anything billed as such, should make us cautious because they often fail the Occam’s razor test. Occam’s razor states that the best solution to a problem is the one that solves it most simply.

Practitioners developing Advanced Metrics often don’t care how messy they are. Consider ESPN’s proprietary quarterback metric, QBR. ESPN claims that more than 10,000 lines of code are needed to compute it. Traditional passer rating, meanwhile, probably takes somewhere in the neighborhood of 5 to 10.
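For comparison, here is the standard NFL passer rating formula written as a short Python function. It has four components (completion rate, yards per attempt, touchdown rate, interception rate), each clamped to between 0 and 2.375, then averaged and scaled. The function name and argument order are our own choices.

```python
def passer_rating(comp, att, yds, td, ints):
    """NFL passer rating (0 to 158.3) for a game or season stat line."""
    if att == 0:
        raise ValueError("passer rating is undefined with zero attempts")

    def clamp(x):
        # Each component is capped to the range [0, 2.375].
        return max(0.0, min(x, 2.375))

    a = clamp((comp / att - 0.3) * 5)       # completion percentage
    b = clamp((yds / att - 3) * 0.25)       # yards per attempt
    c = clamp(td / att * 20)                # touchdown rate
    d = clamp(2.375 - ints / att * 25)      # interception rate
    return (a + b + c + d) / 6 * 100


print(round(passer_rating(20, 30, 250, 2, 1), 1))
```

A perfect stat line tops out at 158.3, and the whole calculation, guard clause included, fits in about a dozen lines.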

And how much do we want to rely on any individual metric to measure quarterback play, especially when the playoffs are single elimination and game-to-game variance in performance is so high?

Contrast QBR with our measure of QB performance, P(100) rating: the probability that a quarterback has a passer rating above 100 over a stretch of four games. It’s complex, but it’s calculated in fewer than 20 lines of code, which makes it roughly 500x simpler than QBR. On top of that, it has a very clear opinion: a quarterback’s job is to be good enough, consistently enough, to put their team in contention for a Super Bowl at the end of the year.
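We haven’t published the exact P(100) calculation here, so the following is only a hypothetical sketch of one plausible reading: the share of rolling four-game stretches in which a quarterback’s pooled passer rating tops 100. The windowing, the pooling of counting stats, and the names `p100` and `GameLine` are all assumptions for illustration. It estimates the probability as an observed frequency rather than with a fitted model.

```python
from typing import List, Tuple

# One game's (completions, attempts, yards, touchdowns, interceptions).
GameLine = Tuple[int, int, int, int, int]


def passer_rating(comp, att, yds, td, ints):
    """Standard NFL passer rating; each component is clamped to [0, 2.375]."""
    clamp = lambda x: max(0.0, min(x, 2.375))
    a = clamp((comp / att - 0.3) * 5)
    b = clamp((yds / att - 3) * 0.25)
    c = clamp(td / att * 20)
    d = clamp(2.375 - ints / att * 25)
    return (a + b + c + d) / 6 * 100


def p100(games: List[GameLine], window: int = 4, threshold: float = 100.0) -> float:
    """HYPOTHETICAL reading of P(100): fraction of rolling `window`-game
    stretches whose pooled passer rating exceeds `threshold`."""
    if len(games) < window:
        raise ValueError("need at least one full window of games")
    n_windows = len(games) - window + 1
    hits = 0
    for start in range(n_windows):
        # Pool raw counting stats across the stretch, then rate the pooled line.
        pooled = [sum(col) for col in zip(*games[start:start + window])]
        if passer_rating(*pooled) > threshold:
            hits += 1
    return hits / n_windows
```

Pooling the counting stats, rather than averaging four single-game ratings, keeps a low-attempt game from counting as much as a 45-attempt one; that choice is ours, not necessarily the one behind P(100).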

Anything branded as an advanced metric is likely trying to hide the ball. Or measure something that doesn’t matter. Be skeptical.

And lastly: Conditional Metrics. These are the favorite type of analytic for anti-analytic commentators to mock. And, honestly, we should be mocking them too within the analytics community. (And within this post, we already have. See our criticism of Chris’ use of data above.)

Conditional metrics are things like:

Giancarlo Stanton has a .202 xwOBA on ground balls

Russell Wilson has the highest season-long passer rating for any quarterback who was sacked 20 or more times

In conditional metrics, we’re looking at a metric that is conditioned on something else. Often, these conditions are absurd or irrelevant. That either brings back the problem of a stat not being opinionated, or it creates a statistical one: a narrowly filtered sample that doesn’t generalize beyond the context it was drawn from.
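To see how easily conditioning manufactures “leaders,” here is a toy simulation with entirely made-up inputs: 32 quarterbacks with identical true talent, whose observed ratings differ only by random noise, sliced by arbitrary yes/no conditions. The 90-point baseline, the noise level, the 50/50 splits, and the function name are all assumptions for illustration. Whoever tops a slice could be billed as “the highest passer rating for any quarterback who…,” even though no one is actually better.

```python
import random


def conditional_leaders(n_players=32, n_conditions=20, seed=0):
    """Return the set of player indices that top at least one arbitrary slice.

    Toy model (all assumptions): every player has the SAME true talent.
    """
    rng = random.Random(seed)
    # Observed ratings are a common baseline plus pure noise.
    ratings = [90 + rng.gauss(0, 10) for _ in range(n_players)]
    leaders = set()
    for _ in range(n_conditions):
        # An arbitrary yes/no split, standing in for "sacked 20 or more times".
        subset = [i for i in range(n_players) if rng.random() < 0.5]
        if subset:
            # The best observed rating within the slice earns a conditional "title".
            leaders.add(max(subset, key=lambda i: ratings[i]))
    return leaders


print(len(conditional_leaders()))
```

Because the players are identical by construction, any count above one is a conditional stat crowning noise.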

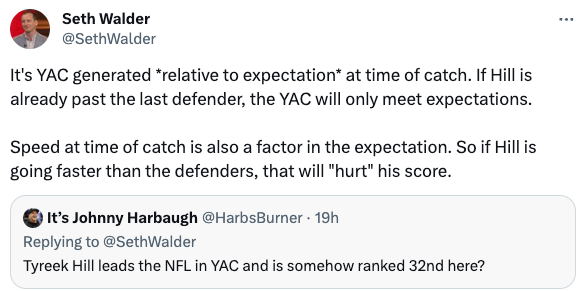

A good recent example of conditional metrics becoming analytic candy is “Yards After the Catch Above Expectation”, defended here by ESPN’s Seth Walder, who uses a lot of these “too many trees, not enough forest” metrics.

YAC+ accounts for speed at the time of reception and position on the field. So what does it measure? Yards after broken tackles? Ability to accelerate after a catch? It’s not really clear what it captures, or why we should care. And as the commenter points out, it glaringly omits Tyreek Hill. It fails the smell test. Analytic candy: good for Seth and his employer, ESPN, but bad for anyone trying to understand football.

And, as an aside, the smell test is important! If someone comes up with a basketball metric measuring overall player skill and it doesn’t have M.J. and LeBron in the top 5, we need to be VERY skeptical.

We recently applied our Manalytics Scores, a metric for assessing the title likelihood of teams based on the MVP-quality of their best player, to individual players. LeBron shakes out as better than anyone but Kareem, who would be the only other acceptable choice for best player ever. M.J. is in a four-way shuffle with Bill Russell, Larry Bird, and Magic Johnson, and that is explainable: Jordan took two of his prime years off to play baseball, costing him dearly in our Manalytics Score metric.

If Jordan were 15th, we’d have a problem. Just like YAC+ has a problem placing Tyreek Hill 32nd.

Using Analytics For Good

The popularization of analytics as a technique has made stats sexy. Even people who don’t believe in analytics—or don’t take an analytics-first approach to their view of sports—will use stats to support their argument. But as the old adage goes: there are lies, damned lies, and statistics. That’s no different in sport.

To use analytics well, we should strive to identify and rely on analytics that align with our theories about how sports work. And we should have theories about how each sport works! We should have opinions on whether offense or defense is more important—or if they’re even; if starting pitching is as valuable as relief pitching; if a great big is more important than a great guard. Without these, no amount of numbers can help us think about the game.

And only with a theory of the case and analytics together can we arrive at a better understanding of what is really happening on the field.